Thoughts on the future of programming

GitHub Copilot is a $100M revenue business, and it seems like every other week a new Large Language Model (LLM) is released that beats some benchmark. The Rabbit R1 and the Humane AI Pin received harsh criticism and negative reviews. Large tech companies at all-time highs in market capitalization are still laying off employees. The present of programming is a strange place. What does the future hold?

Looking at trends

CPU

Moore’s Law is commonly quoted as transistor density doubling every 18 months (Moore’s own 1975 revision put it at every two years). In December 2023, Intel CEO Pat Gelsinger said the pace has slowed to roughly a three-year cycle.
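To make the difference between those two cadences concrete, here is a small Python sketch of the compounding involved (my own illustration, not Intel’s): if something doubles every T years, after t years it has grown by a factor of 2^(t/T). The function name `growth_factor` and the six-year comparison window are arbitrary choices.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Return how many times larger a quantity is after `years`
    if it doubles every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


# Compare the two cadences over the same (arbitrary) 6-year window.
print(growth_factor(6, 1.5))  # 16.0: doubling every 18 months
print(growth_factor(6, 3))    # 4.0: doubling every 3 years
```

Stretching the doubling period from 18 months to three years turns a 16x improvement into a 4x one over the same six years.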

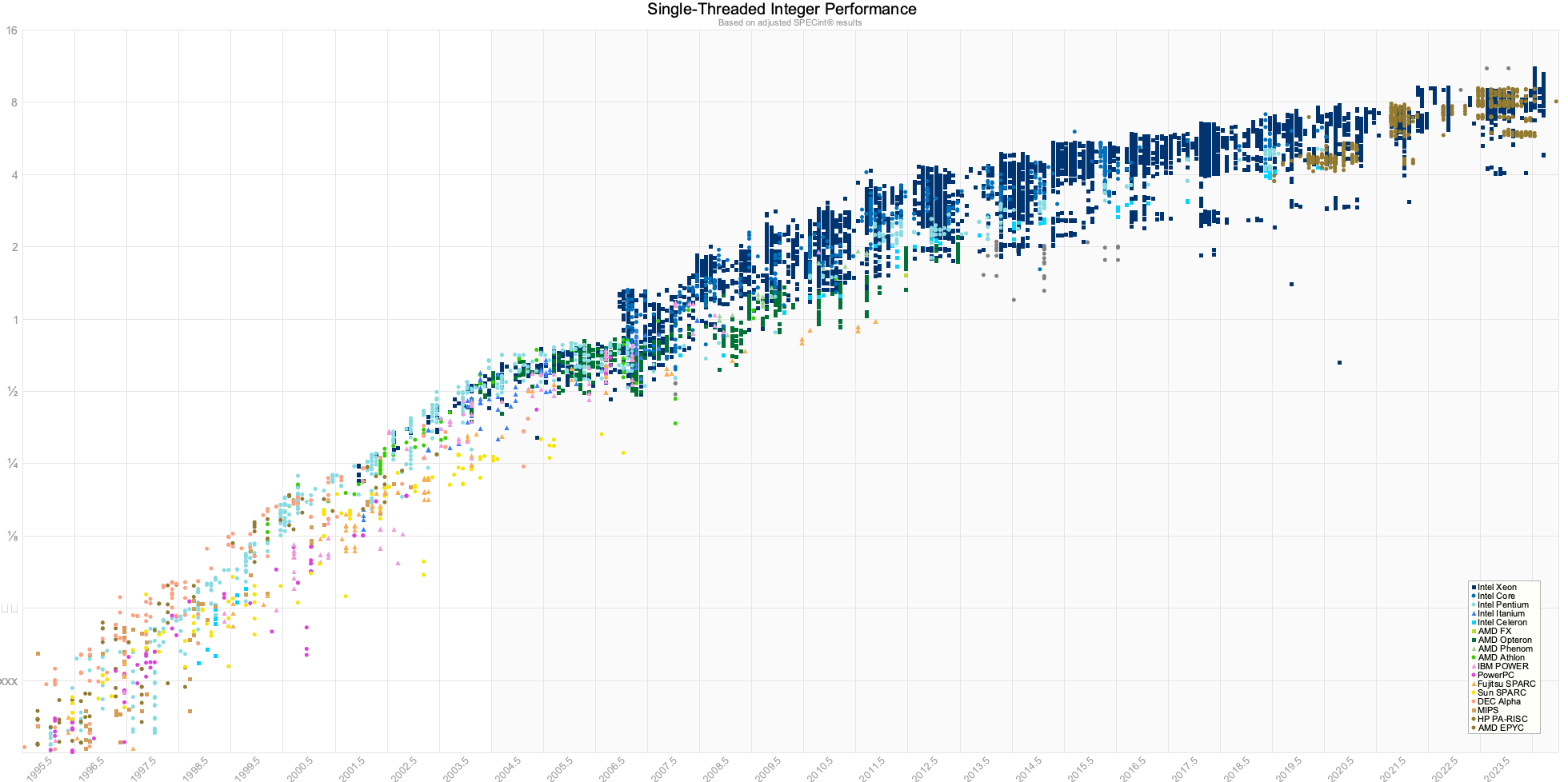

Not only that, but single-core performance for integer operations has definitely slowed down.

Multi-core performance is improving at a faster rate, partly due to increasing core counts. This keeps the benchmarks going up, but most software isn’t taking advantage of it: most modern web apps still run their JavaScript on a single main thread. Developers will have to think about parallelism more than ever before to benefit from these improvements.
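As a minimal sketch of what thinking about parallelism can look like, the Python example below splits independent work (hashing chunks of data) across a thread pool. It relies on one CPython detail: the `hashlib` documentation states that the GIL is released when hashing data larger than 2047 bytes, so these threads can overlap on multiple cores. For pure-Python CPU-bound code on the standard CPython build, threads do not run bytecode in parallel, and `ProcessPoolExecutor` is the usual choice instead. The function names and workload are hypothetical.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor
from typing import List, Optional


def digest_chunk(chunk: bytes) -> str:
    # CPython's hashlib releases the GIL while hashing inputs larger than
    # 2047 bytes, so several of these calls can run on different cores at once.
    return hashlib.sha256(chunk).hexdigest()


def digest_sequential(chunks: List[bytes]) -> List[str]:
    # Baseline: a single thread hashes every chunk, one after another.
    return [digest_chunk(c) for c in chunks]


def digest_parallel(chunks: List[bytes], workers: Optional[int] = None) -> List[str]:
    # Fan the independent chunks out to a pool of worker threads.
    # workers=None lets ThreadPoolExecutor choose a default based on the CPU count.
    # pool.map() yields results in input order, so the output matches the baseline.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(digest_chunk, chunks))


if __name__ == "__main__":
    # Hypothetical workload: 8 independent 1 MB chunks.
    data = [bytes([i]) * 1_000_000 for i in range(8)]
    assert digest_parallel(data) == digest_sequential(data)
```

The key design point is that the chunks are independent, with no shared mutable state, so splitting the work needs no locks. Whether it is actually faster depends on chunk size and core count, so it is worth measuring before assuming a speedup.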

RAM

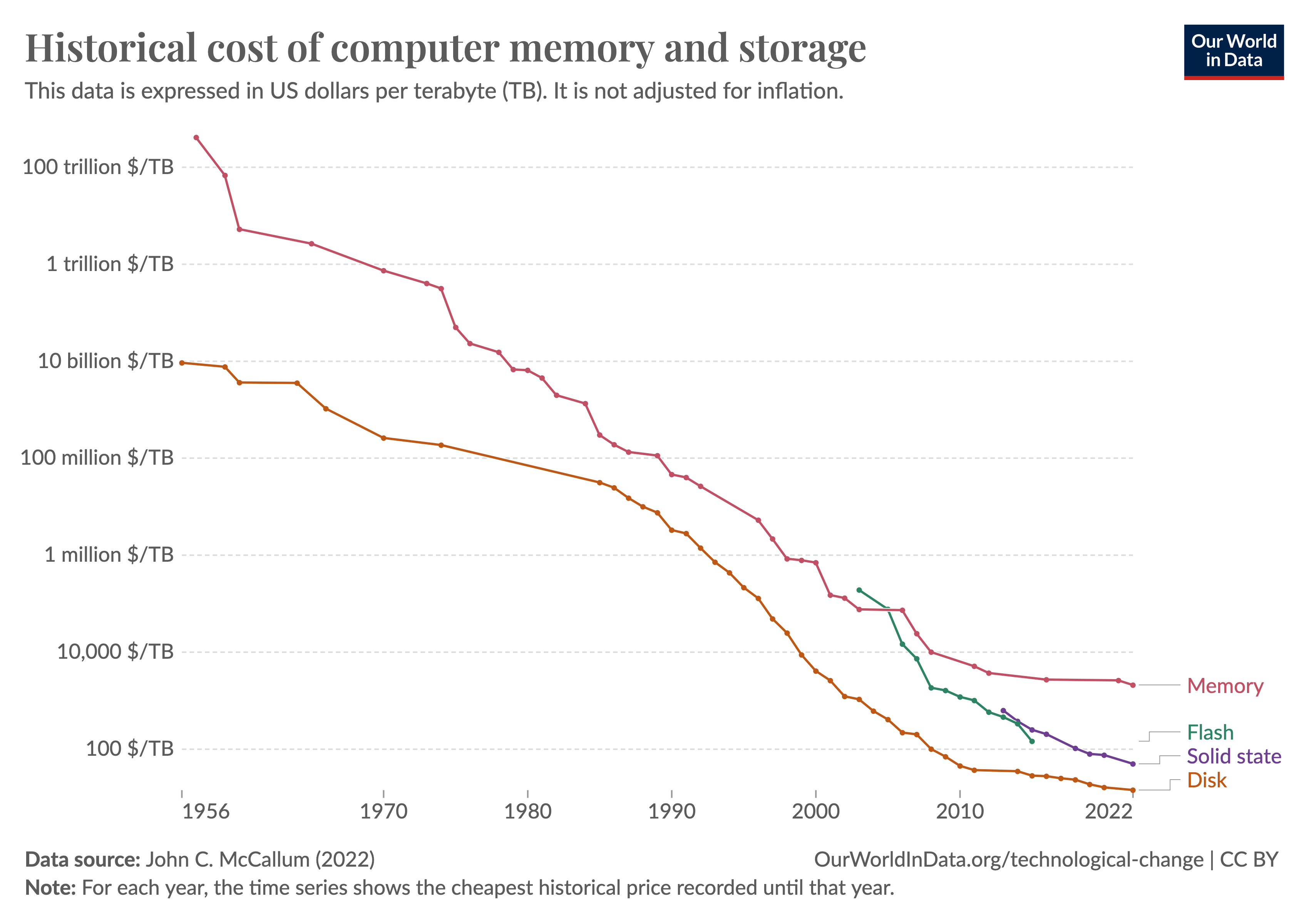

RAM prices per GB have been relatively stagnant for the past 8 years. Due to technical challenges, we’re not seeing the same rate of improvement as we did in the past. EUV lithography is only now starting to be adopted in DRAM manufacturing, so we’re still a few years away from seeing its benefits.

Even then, we will eventually hit the physical limits of transistors and capacitors. We may start adding more RAM slots to motherboards, but longer signal paths and more complex memory controllers increase latency, and more memory also means higher power consumption.

Therefore, RAM is going to be the bottleneck for the foreseeable future.

Interestingly, Apple’s strategy of unified memory shared between the CPU and GPU is paying off in unexpected ways. With 128GB of unified memory in their top-of-the-line models, these machines can fit some of the largest LLMs that people run locally. Compared to $30k for an Nvidia H100 card with 80GB of memory, this is a bargain.
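A rough back-of-the-envelope check shows why memory capacity matters so much here: a model’s weights alone take about (parameters × bits per parameter ÷ 8) bytes. The sketch below ignores the KV cache, activations, runtime overhead, and the GB/GiB distinction, and the parameter counts are hypothetical examples rather than specific models.

```python
def weights_gb(params_billions: float, bits_per_param: int) -> float:
    """Approximate size of a model's weights in GB.

    1 billion parameters at 8 bits (1 byte) each is about 1 GB.
    Ignores KV cache, activations and runtime overhead.
    """
    return params_billions * bits_per_param / 8


def fits(params_billions: float, bits_per_param: int, memory_gb: float) -> bool:
    # True if the weights alone fit within the given memory budget.
    return weights_gb(params_billions, bits_per_param) <= memory_gb


for params, bits in [(70, 16), (70, 4), (100, 8)]:
    size = weights_gb(params, bits)
    print(f"{params}B @ {bits}-bit: {size:.0f} GB | "
          f"fits 80 GB: {fits(params, bits, 80)} | fits 128 GB: {fits(params, bits, 128)}")
```

Under these assumptions, a hypothetical 100B-parameter model quantized to 8 bits needs about 100 GB for its weights: too much for a single 80GB card, but within 128GB of unified memory.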

If I may speculate, local LLMs will actually make the situation worse, as each application bundled with its own local LLM will consume more memory than one without. Users will have to choose between having more applications open, having more powerful applications, or resorting to the cloud.

Ecosystems

The common criticism of AI hardware right now is “This should have been an app,” but the companies making these devices aren’t stupid. They realize that as an app, they would not be able to access the data required to provide the best experience for their users, such as reading your browser history to provide better search results.

My prediction is that in the short term we will see more and more hardware tied to a specific ecosystem, as well as more and more ecosystems. Writing both an Android and an iOS app is already a huge effort, so either cross-platform frameworks will become more popular, or the market will become more fragmented.

My bet is that web technologies will dominate further, as AI hardware companies realize that the fastest way to build an ecosystem is through the web, with its large developer base and cross-platform reach.

What does this mean for programming?

We will have to start thinking about parallelism, memory usage and resource constraints more than ever before. The days of throwing more hardware at a problem are coming to an end.
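One everyday habit that suits a world of constrained memory is streaming data instead of loading it all at once. The Python sketch below hashes an input in fixed-size chunks, so the hashing step holds at most one chunk at a time regardless of input size. The 64 KiB chunk size and the function names are arbitrary choices for illustration.

```python
import hashlib
import io
from typing import BinaryIO

CHUNK_SIZE = 64 * 1024  # 64 KiB per read; an arbitrary illustrative value


def sha256_all_at_once(stream: BinaryIO) -> str:
    # Reads the whole input into memory first: peak usage grows with input size.
    return hashlib.sha256(stream.read()).hexdigest()


def sha256_streaming(stream: BinaryIO, chunk_size: int = CHUNK_SIZE) -> str:
    # Holds at most one chunk in memory at a time; read() returns b"" at EOF.
    digest = hashlib.sha256()
    while chunk := stream.read(chunk_size):
        digest.update(chunk)
    return digest.hexdigest()


if __name__ == "__main__":
    payload = b"x" * 10_000_000  # 10 MB in-memory stand-in for a large file
    assert sha256_streaming(io.BytesIO(payload)) == sha256_all_at_once(io.BytesIO(payload))
```

With a real file you would pass `open(path, "rb")` instead of a `BytesIO`. Both functions return the same digest; only their memory profile differs.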

Lower-level languages, and languages with novel approaches to memory management, will become more popular as developers seek tools that let them make better use of increasingly constrained resources. Rust and Zig are already seeing a lot of interest, and I think this trend will continue.

Cross-platform frameworks and web technologies will become more popular as developers try to reach as many users as possible with as little effort as possible. Native applications will still be necessary where performance is critical, but the majority of applications will be web-based.